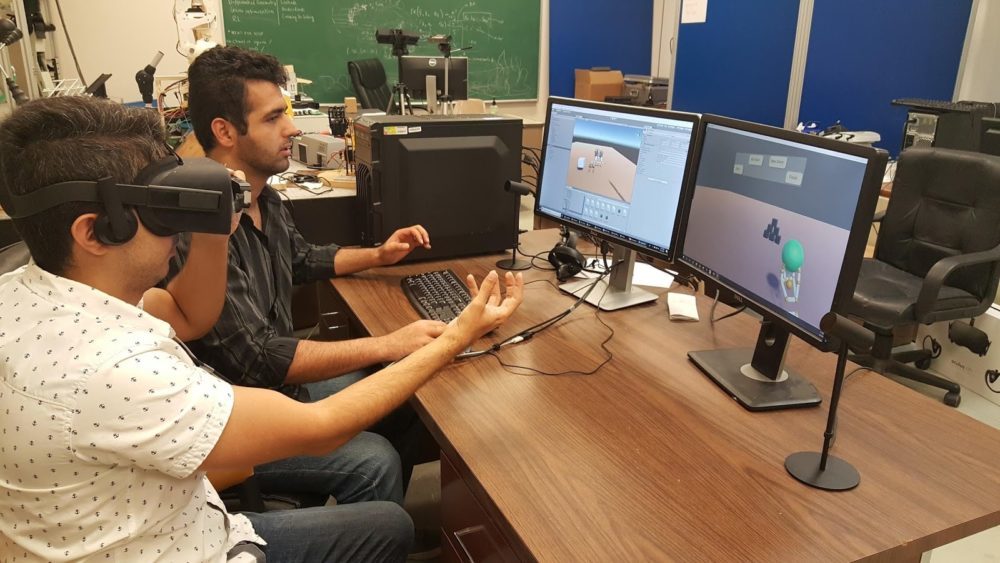

(Above photo: Graduate student Omid Heidari testing the virtual reality system with healthy subjects in the Robotics LAB at ISU)

My interest in Virtual/Augmented Reality technology began when I started working as a research assistant on a project called “ARWED” at Idaho State University. Currently, I am a PhD student at ISU majoring in Mechanical Engineering with a focus on Rehabilitation Robotics and Exoskeletons. The goal of the project was to see how the brain reacts to reflected virtual hands when they are used as a therapeutic intervention. Previous research suggests that when a person observes an action, the same area of the brain is activated as though they were physically performing that action; this is attributed to the mirror neuron system. What could be better for this purpose than a virtual environment that fully immerses the user? By immersing the user in a controlled virtual environment, we can send edited signals to the brain through the eyes.

From left: Omid Heidari (ME Student), Alba Perez-Gracia (ME Chair), Nancy Devine (Associate Dean of SRCS), Vahid Pourgharibshahi (ME Student), and Marco Schoen (ME Professor)

I was responsible for the engineering portion of this physical-therapy-based project. Through it I had the chance to learn Unity as a platform for building Virtual Reality systems and to use the Leap Motion camera to bring real hands into the virtual world. With the engineering part complete, the project is now being tested and analyzed by ISU’s Physical Therapy department. These experiments, which involve both healthy participants and individuals who have had a stroke, are ongoing and will hopefully yield final results soon.

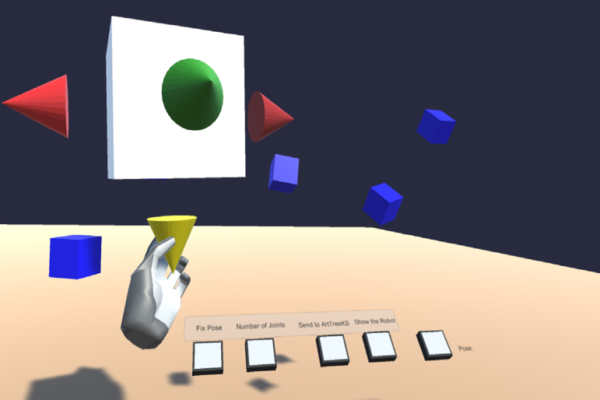

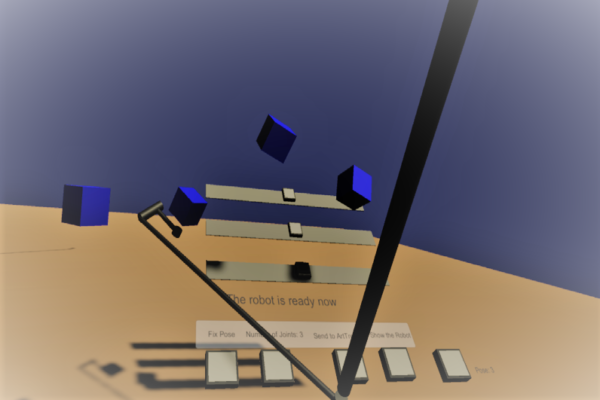

This project opened the door to the VR/AR world for me, a new technology in which a virtual environment and virtual objects are used to expand human perception of the world. As a student working in the Robotics LAB, I became very interested in investigating VR/AR applications in the Robotics industry. I have built a VR application in which Robot Kinematics Synthesis can be performed. The only thing the user needs to know to design a robot is the set of poses that the robot end-effector is supposed to pass through. In this sense, the user can be anybody, regardless of their level of knowledge in Robotics; it is basically a VR game now instead of a graduate-level problem. The application uses ArtTreeKS to solve the kinematics equations in the background and Leap Motion to get the inputs from the user’s real hands. The practical applications of such technology are by no means exhausted. As I learned about and investigated uses of AR/VR, I became aware of additional projects that could benefit from it.
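To make the idea of "poses the end-effector passes through" concrete, here is a minimal sketch of how such task poses could be represented before being handed to a solver. It is only an illustration under my own assumptions: the `Pose` class and the `to_matrix` function are hypothetical names, and this is neither the ArtTreeKS input format nor the code used in the application. Each pose is a position plus an orientation quaternion, converted here to the 4x4 homogeneous transform commonly used in kinematics.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    """Hypothetical container for one desired end-effector pose."""
    position: tuple    # (x, y, z)
    quaternion: tuple  # (w, x, y, z), normalized inside to_matrix


def to_matrix(pose):
    """Convert a pose to a 4x4 homogeneous transform (list of rows)."""
    w, x, y, z = pose.quaternion
    norm = math.sqrt(w * w + x * x + y * y + z * z)
    if norm == 0.0:
        raise ValueError("quaternion must be non-zero")
    w, x, y, z = w / norm, x / norm, y / norm, z / norm
    px, py, pz = pose.position
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y), px],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x), py],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y), pz],
        [0.0, 0.0, 0.0, 1.0],
    ]


# Example task: two poses a user might place by hand in the VR scene
# (values are made up for illustration).
half = math.sqrt(0.5)
task = [
    Pose((0.0, 0.0, 0.0), (1.0, 0.0, 0.0, 0.0)),    # start, no rotation
    Pose((0.3, 0.1, 0.2), (half, 0.0, 0.0, half)),  # rotated 90 degrees about z
]
transforms = [to_matrix(p) for p in task]
```

A list of transforms like this is all the user would have to provide; the synthesis itself stays in the background solver.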

I have more experience with VR than with AR, but an internship at House Of Design (HOD) gave me the chance to investigate the applications of AR technology in Robotics more fully. The leadership of HOD stays current with new technologies such as AR/VR and looks for practical ways to apply them in their robotic systems to improve efficiency and quality.

There are already some ideas about using AR in industry, such as remote assistance and system maintenance. AR technology can give a company's support team the ability to assist customers remotely. A maintenance team can also use AR to detect a system and pull up its 3D model, worksheets, and other information about its industrial parts, as well as any tutorials or disassembly instructions. After reviewing many of the SDKs available for AR, I ended up implementing MAXST, which is one of the newer ones in the field. One of the good features of this SDK is its use of SLAM (Simultaneous Localization and Mapping) for spatial mapping of the environment. With this method, we do not need special hardware or cameras to do the mapping. Moreover, the kit's ability to detect 2D images quickly and precisely provides a good platform for identifying the system and retrieving its information.

In pursuing AR applications, we came up with another idea in which AR is used to ease the debugging process for robot programmers. The purpose of this feature is to display the running code blocks and their outputs right in front of the system, so that programmers can see the results of changes to their code faster.

This mobile application is still in the testing phase. My ultimate goal is to replace the tools currently used to interact with robots with an AR alternative. This would ease the design process, robot training, and debugging for robot programmers. We hope to accomplish this through a collaboration between HOD and ISU in the near future.

If you would like to connect with Omid, he can be reached at heidomid(at)isu.edu.
